Artificial Intelligence (AI) is often portrayed as a transformative technology capable of altering industries and the way society functions. Terms like Machine Learning (ML) and Deep Learning (DL) are used in discussions about self-driving cars, intelligent assistants, or creative tasks like art generation. However, beneath these high-level concepts lies an often-overlooked foundation: mathematics. Mathematics forms the essential framework that enables AI to operate, much like the submerged portion of an iceberg that supports the visible part.

The AI Iceberg Metaphor: What’s Below the Surface?

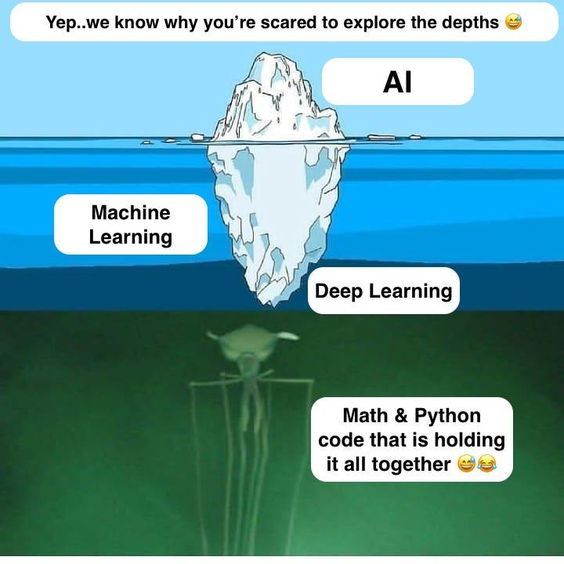

AI, ML, and DL can be visualized as layers of an iceberg. The topmost layer, AI, represents the popular concepts and applications that capture attention. Below it lies ML and DL, which constitute the technologies that enable AI. At the deepest level, mathematics forms the core that supports these technologies. Without mathematics, AI systems would lose the structural foundation needed for their functionality.

In this metaphor, ignoring the mathematical underpinnings is similar to only appreciating the visible part of an iceberg while disregarding the larger, submerged portion. This hidden base provides the stability and structure that keeps the visible part afloat. Likewise, mathematics is what enables AI to function, allowing for model training, data processing, and optimization.

Mathematics: The Bedrock of Machine Learning and Deep Learning

AI models are built on mathematical principles. Whether through data manipulation, decision-making, or optimization, mathematics plays a key role in each process. Different areas of mathematics form the backbone of the AI ecosystem.

Linear Algebra

Linear algebra plays a key role in representing and manipulating data. When an image is fed into a neural network, it is converted into a matrix, a representation made up of rows and columns of numbers. Operations such as rotation, scaling, and feature extraction in the image use linear algebra principles. In deep learning, neural networks perform transformations on data using matrices, vectors, and tensors, where each operation relies on matrix multiplication, vector addition, and other linear algebraic computations.

For example, each layer of a neural network applies a series of transformations to input data using weights and biases, represented as matrices. These operations manipulate input data through matrix multiplication, which enables the model to extract patterns and perform tasks such as classification or regression.

Calculus

Optimization is a critical component of machine learning, where the objective is to minimize an error function, typically by adjusting model parameters. Calculus, especially differential calculus, provides the necessary tools to compute gradients, which indicate the direction and magnitude of change needed to reduce the error. Gradient descent, a commonly used optimization algorithm, employs these gradients to adjust model weights in small steps, improving model accuracy with each iteration.

In deep learning, the backpropagation algorithm relies heavily on calculus to calculate gradients with respect to the model’s parameters. This process enables the model to learn from errors and adjust its weights accordingly. Without calculus, it would be impossible to fine-tune neural networks and improve their predictive capabilities.

Probability and Statistics

Machine learning involves working with data, which is inherently uncertain. Probability and statistics are essential for making decisions based on data that contain noise or randomness. Models that perform classification, regression, or prediction tasks under uncertainty rely on probabilistic methods.

For example, Bayes’ Theorem is used to update the probability of a hypothesis given new evidence. Statistical concepts such as distributions, variance, and hypothesis testing help AI models understand patterns, make predictions, and quantify the level of confidence in their decisions. In particular, probability distributions are used to model the likelihood of various outcomes, while statistical inference helps in drawing conclusions about larger populations based on sample data.

Optimization Theory

Selecting the best parameters for a machine learning model can be viewed as an optimization problem. Optimization theory provides techniques for identifying the parameters that result in the best model performance, given a set of constraints. Convex optimization, in particular, is often used in machine learning to ensure that a global minimum is found when minimizing an objective function.

For instance, the training process of a model involves tuning hyperparameters, such as the learning rate and regularization term. These hyperparameters affect how quickly a model learns and whether it overfits the data. Optimization theory provides strategies to systematically explore different hyperparameter configurations to find the best combination for the task at hand.

Set Theory and Logic

Though less frequently discussed in AI contexts, set theory and formal logic are vital for defining the relationships between data points, algorithms, and decisions. Set theory underpins data structures, enabling operations like unions, intersections, and complements that are necessary for organizing and manipulating large datasets. Formal logic, particularly Boolean algebra, plays a role in decision-making processes and model architecture design.

In neural networks, for example, the logical operations performed by artificial neurons are derived from basic logical functions such as AND, OR, and NOT. These operations allow the network to simulate complex decision-making processes by combining multiple inputs into meaningful outputs.

Why Skipping the Math Won’t Work

It is tempting to approach AI development by focusing solely on coding or leveraging pre-built libraries such as TensorFlow or PyTorch. While these tools provide high-level abstractions for building models with minimal mathematical knowledge, ignoring the underlying mathematics can lead to significant challenges when models fail to perform as expected.

-

Model Failure: When a model underperforms, diagnosing the issue requires a deep understanding of the mathematical principles that govern it. For example, problems with gradient flow, vanishing gradients, or poorly tuned loss functions can lead to suboptimal results, and debugging these issues without a solid mathematical foundation can be difficult.

-

Hyperparameter Tuning: Adjusting a model’s performance often involves changing hyperparameters such as the learning rate or regularization factor. Without a grasp of the underlying optimization and probabilistic concepts, hyperparameter tuning becomes a process of trial and error, often yielding suboptimal results.

-

Algorithm Adaptation: AI is an evolving field, and pre-built solutions may not always fit specific use cases. Customizing models for unique datasets or tasks often requires modifying the underlying algorithms. Without mathematical insight, these customizations are nearly impossible to implement effectively.

Bridging the Gap: Why Math Empowers AI Practitioners

Mathematics does more than facilitate understanding; it equips practitioners to make informed decisions when developing AI systems. There are several ways in which mathematical knowledge empowers AI practitioners:

-

Interpretability: In many applications, especially those involving healthcare or finance, model interpretability is crucial. A solid mathematical foundation provides clarity into how models make decisions, ensuring that their predictions can be explained and trusted.

-

Performance: Fine-tuning model parameters such as learning rates or regularization terms requires an understanding of mathematical principles. Mastery of these principles can significantly improve a model’s performance, both in terms of accuracy and computational efficiency.

-

Innovation: The field of AI is rapidly evolving, and much of its future potential remains unexplored. By understanding the mathematics behind existing models, practitioners are better equipped to develop novel algorithms and push the boundaries of what AI can achieve.

Embracing the Foundations

The rapid growth of AI and machine learning has captured public attention, but understanding their full potential requires delving into the mathematical foundations. While tools and libraries make it possible to work with AI systems without a strong background in mathematics, this comes at the cost of long-term mastery. Embracing mathematics as the foundation of AI enables practitioners to not only understand existing models but also to innovate and create new ones.

Skipping the mathematical groundwork may lead to short-term success, but it limits the ability to solve complex problems and develop more advanced systems. For those serious about mastering AI, a deep dive into mathematics is not just beneficial—it is essential.