The Importance of Data Design, Quality Assurance, and the Urgent Need for Defined Roles in the Data Industry

In today’s data-driven world, we often overlook one of the most critical aspects of any data initiative: data quality. While organizations across industries are investing heavily in advanced analytics, machine learning, and artificial intelligence, many fail to acknowledge that without high-quality data, these investments may fail to deliver meaningful results. The saying “Garbage In, Garbage Out” aptly describes this dilemma—if the input data is flawed, no amount of sophisticated processing can rectify it.

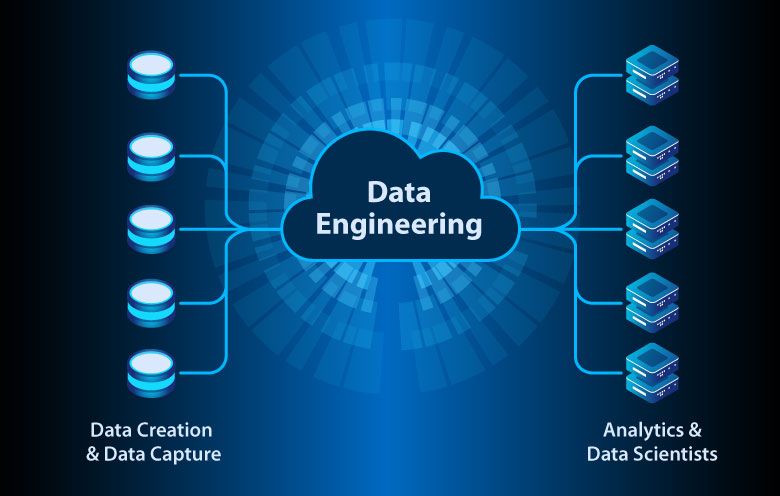

Data quality is an art that requires careful attention from the very start of the data lifecycle. Unfortunately, many organizations focus on making data usable and accessible through data engineering, or turning data into actionable insights through data science, without first ensuring that the data being used is accurate, complete, and reliable. This oversight is a widespread problem, and unless addressed, it will continue to undermine efforts to make data truly valuable.

Data Quality: The Missing Link in the Data Lifecycle

The data lifecycle begins long before a data scientist writes their first line of code. It starts with data design—how data is structured, collected, and curated. Data engineers, researchers, and UX designers often play a role here, but there is no standardized responsibility for overseeing the design of high-quality datasets. As a result, data quality is often treated as a secondary concern, even though it’s foundational to the success of any data project.

Without structured processes and defined roles, data quality becomes the victim of neglect, resulting in an “everybody’s job” mentality that inevitably leads to nobody taking full responsibility. In contrast to the high visibility of data scientists and engineers, the role of ensuring data quality is obscure and underappreciated.

The Importance of High-Quality Data

Why does data quality matter so much? High-quality data allows organizations to make informed decisions, detect trends, and predict future outcomes with confidence. Poor-quality data, on the other hand, can lead to erroneous conclusions, faulty business strategies, and wasted resources.

Take, for instance, a financial institution that relies on transaction data to detect fraudulent activity. If the data is incomplete, mislabeled, or contains duplicate entries, the models built to detect fraud will either fail to identify legitimate threats or incorrectly flag harmless transactions. In a business where accuracy is paramount, poor data quality could cost millions of dollars or damage customer trust.

High-quality data has several key attributes:

- Accuracy: The data correctly represents the real-world event or entity it describes.

- Completeness: All required data is available and accounted for.

- Consistency: Data is consistent across different systems or within a dataset.

- Timeliness: Data is up to date and available when needed.

- Reliability: Data remains dependable and correct over time.

Organizations that focus on these attributes from the start can build a data ecosystem that produces actionable, reliable insights.

Data Quality vs. Data Quantity: A Common Misconception

A common pitfall for organizations is to prioritize collecting vast amounts of data over ensuring the quality of the data they gather. With the advent of big data technologies, it’s easy to assume that more data is always better. However, this is a dangerous misconception.

Collecting large volumes of data that are riddled with errors or inconsistencies does not add value—it amplifies the noise. In contrast, high-quality data—data that is accurate, clean, and meaningful—can drive better decisions, even if it is limited in quantity. For instance, a smaller, well-maintained dataset often yields better insights than a sprawling dataset full of anomalies and missing values.

The Lack of a Clear Role: Who Owns Data Quality?

One of the greatest challenges in ensuring data quality is the lack of clear ownership. As data projects grow in scale and complexity, multiple stakeholders are involved, including data engineers, scientists, analysts, product managers, and even UX designers. However, no specific role is universally tasked with overseeing the quality of data across its entire lifecycle.

This absence of a dedicated data quality professional leads to confusion. Who is responsible for verifying data accuracy before it enters production systems? Who ensures that the data complies with governance and privacy regulations? The answer varies from organization to organization, often leading to fragmented processes and unchecked errors.

There is a growing need for a dedicated role—call it a data quality engineer, data curator, or data steward—who is responsible for the integrity of data from its initial collection to its final analysis. This role would include responsibilities such as:

- Designing data collection processes that minimize the introduction of errors.

- Implementing rigorous data validation checks at every stage of the data pipeline.

- Ensuring compliance with data governance and privacy regulations.

- Collaborating with data engineers and scientists to maintain data consistency and reliability.

Without a clear owner, data quality issues can fester, becoming systemic problems that are harder to address over time.

Establishing a Framework for Data Quality

To mitigate these issues, organizations should establish a formal framework for managing data quality across its entire lifecycle. This framework should involve multiple stakeholders and be supported by well-defined policies and processes.

1. Data Collection Policies

Data collection policies define how, why, and what data is collected. These policies ensure that data is gathered in a structured manner, reducing the likelihood of errors. For example, policies might specify acceptable formats for entering data, or they may enforce data validation rules during entry to catch mistakes early on.

Clear ownership should be established over this process to ensure consistency and accountability.

2. Data Usage and Access Policies

Once collected, data should be used responsibly. Data usage policies ensure that only authorized personnel can access the data and that it is used in accordance with ethical and legal guidelines. Misuse of data—whether intentional or accidental—can lead to serious consequences, including breaches of privacy or violations of regulations such as GDPR.

Access policies ensure that sensitive data is protected, with strict controls over who can view, edit, or share it.

3. Data Retention and Security Policies

Organizations must decide how long data is retained and how it is stored securely. Retaining data indefinitely without a clear purpose introduces security risks and can lead to privacy violations. Data retention policies ensure that data is stored only as long as it is needed for legitimate business purposes, after which it should be securely archived or deleted.

Security policies should also dictate encryption practices, user authentication, and other controls to prevent unauthorized access.

4. Data Governance and Quality Assurance

Data governance goes beyond data collection and storage; it defines how data is managed and maintained over time. This involves implementing processes that continuously monitor and improve data quality. Data governance should also establish accountability, with clear roles for individuals responsible for maintaining data standards.

Governance policies also ensure data consistency across different platforms and departments. Without this, datasets might conflict with each other, leading to inaccurate reporting and decision-making.

Case Study: Improving Data Quality in a Retail Company

Consider a retail company that collects transaction data from its online store. The company relies on this data to understand customer behavior, optimize product offerings, and improve sales. However, the team soon realizes that their data is incomplete and inconsistent. Some transactions are missing product details, others have incorrect pricing information, and duplicate entries are causing issues.

To resolve these problems, the company assembles a team with three key roles:

Data Engineer:

The data engineer plays a crucial role in ensuring that data is accurate, consistent, and fit for analysis. In this context, the data engineer is responsible for building an automated data pipeline that streamlines the flow of transaction data from the source (such as a database or data warehouse) to its final destination, where it can be analyzed and used for business insights.

This pipeline not only automates the ingestion of data but also performs data cleansing and validation tasks, which are essential for maintaining data quality.

Key Responsibilities of the Data Engineer:

-

Data Ingestion: The first step in the pipeline involves setting up mechanisms to automatically pull data from various sources. For a retail company, this could include databases storing transaction records, APIs capturing real-time sales data, or external systems such as point-of-sale terminals. The data engineer ensures that this data is ingested continuously, without manual intervention, allowing for seamless and real-time updates.

-

Data Profiling: Before any cleaning or validation occurs, the data engineer performs data profiling. This process involves analyzing the incoming data to understand its structure, contents, and quality. Through profiling, the data engineer identifies issues such as missing values, outliers, incorrect data types, or inconsistencies in formats. For example, transaction dates might be recorded inconsistently across different sources, or some product entries might have missing prices.

Data profiling helps the data engineer gain a comprehensive view of the dataset’s overall health, allowing them to spot patterns that may require correction later in the pipeline.

-

Data Cleansing: Once anomalies are identified, the data engineer applies data cleansing techniques to rectify these issues. This includes:

- Handling missing data: The engineer can either remove rows with missing values or, if appropriate, fill in the gaps using techniques like interpolation or mean substitution.

- Standardizing formats: Dates, product codes, and customer IDs may be converted to a standard format to ensure consistency across the dataset.

- Removing duplicates: If a customer transaction is recorded multiple times due to system errors or integration issues, the engineer removes duplicate entries to maintain the integrity of the data.

- Correcting errors: Errors such as incorrectly formatted data (e.g., text in numeric fields) are automatically flagged and corrected, or moved to an exception table for further investigation.

-

Data Validation Rules: To ensure the consistency and reliability of the data, the engineer establishes validation rules that enforce data integrity. These rules are designed to catch data that falls outside expected ranges or violates business logic. For instance:

- Transaction amounts should always be positive and fall within a reasonable range based on the business’s historical data.

- Product codes must match those in the company’s product catalog, ensuring that no unrecognized or incorrect products are recorded.

- Dates of transactions must be valid and fall within expected time frames (e.g., no future transactions or transactions outside business hours).

These validation checks are automated within the pipeline, ensuring that only high-quality data passes through for analysis. Any data that fails validation is flagged and routed to a separate process where it can be corrected or reviewed manually.

-

Error Logging and Monitoring: A robust pipeline includes mechanisms for monitoring data quality in real time. The data engineer sets up error logging tools that capture any discrepancies or validation failures during the ingestion process. These logs are vital for auditing purposes and for tracking down recurring data issues.

Additionally, the engineer implements automated alerts that notify the team of any critical errors in the data pipeline, such as data sources going offline, missing batches of data, or spikes in validation failures. This ensures that data quality issues can be addressed promptly before they affect downstream processes.

-

Scalability and Efficiency: As the volume of data grows, it is important for the pipeline to scale efficiently. The data engineer optimizes the pipeline to handle larger datasets without compromising on performance. This involves ensuring that the pipeline can process transactions in near real-time while maintaining its cleansing and validation processes. Technologies like distributed computing and cloud-based data pipelines can be utilized to ensure scalability and minimize latency.

-

Data Integration and Transformation: In addition to cleansing and validating data, the data engineer may also need to integrate data from multiple sources and transform it into a unified format that aligns with business requirements. This could involve merging transaction data with customer data to create a comprehensive view of customer behavior or joining external datasets, such as demographic information, to enhance the analysis.

The transformation process may also involve creating new variables or features that can be used by data scientists for modeling. For instance, the engineer might compute aggregated statistics (e.g., average transaction value per customer) or time-based features (e.g., the number of purchases a customer makes within a specific time frame).

-

Collaboration with Other Teams: Finally, the data engineer works closely with data scientists and analysts to ensure that the cleaned, validated data is fit for analysis. They collaborate to understand the specific requirements of the analysis, ensuring that the pipeline is tailored to produce data that meets the needs of business intelligence and advanced analytics teams.

By automating the data pipeline, the data engineer ensures that the organization can consistently rely on accurate, clean, and high-quality data. This forms the backbone of any data-driven strategy, enabling the company to make informed decisions, optimize operations, and better serve customers.

Data Scientist:

The data scientist plays a pivotal role in turning clean, validated data into actionable insights that can drive business decisions. By working closely with the data engineer, the data scientist ensures that the data is not only accurate but also well-suited for in-depth analysis. Their primary responsibility is to analyze the cleaned data, identify patterns, and leverage statistical and machine learning models to provide insights that help optimize operations and strategies.

Key Responsibilities of the Data Scientist:

-

Exploratory Data Analysis (EDA): Once the data has been cleaned and validated, the first task of the data scientist is to conduct exploratory data analysis (EDA). EDA is crucial for understanding the structure, trends, and relationships within the data. By using statistical tools and visualization techniques, such as histograms, scatter plots, and correlation matrices, the data scientist uncovers the underlying distribution of data, highlights outliers, and identifies initial patterns or anomalies.

For instance, in the context of a retail company, the data scientist might explore customer purchasing behavior to answer questions like:

- What products are most frequently purchased together?

- Are there patterns in the time of day or week when certain items are sold?

- What is the distribution of transaction amounts, and are there any unusually high or low transactions?

EDA helps the data scientist generate hypotheses and lay the groundwork for more sophisticated modeling later on.

-

Feature Engineering: Feature engineering is the process of creating new variables (features) from the existing dataset to improve the predictive power of machine learning models. A well-engineered feature can dramatically enhance the performance of models by better representing the underlying relationships in the data.

In a retail scenario, a data scientist might:

- Calculate the average purchase frequency for each customer.

- Create time-based features such as the recency of a customer’s last purchase or seasonal buying trends.

- Segment customers based on their purchase history, creating a customer lifetime value (CLV) feature that estimates the revenue each customer will generate over time.

These engineered features allow the data scientist to capture more nuanced insights into customer behavior, improving the accuracy and relevance of the resulting models.

-

Model Building and Machine Learning: The core responsibility of the data scientist is to build and apply machine learning models to predict future behaviors, trends, or outcomes. Depending on the problem at hand, the data scientist may choose from a variety of models such as:

- Classification models to categorize customers or transactions (e.g., predicting whether a customer will make a repeat purchase).

- Regression models to predict continuous outcomes (e.g., estimating the amount a customer will spend in the next month).

- Clustering algorithms to group customers based on purchasing behaviors (e.g., segmenting customers into high-value, occasional, or one-time buyers).

In the retail example, predictive models can be used to forecast demand, optimize inventory, and personalize marketing strategies. For instance, a recommendation system could be built to suggest products to customers based on their past purchases, or a predictive model could estimate future sales of specific products, allowing the company to better manage stock levels.

Supervised learning algorithms like decision trees, random forests, and logistic regression may be employed when labeled data is available. Meanwhile, unsupervised learning techniques, such as K-means clustering or principal component analysis (PCA), are used to discover hidden patterns in the data without explicit labels.

-

Evaluating Model Performance: Once the models are built, the data scientist must evaluate their performance to ensure that they are accurate and reliable. This involves splitting the dataset into training and test sets and applying various evaluation metrics such as accuracy, precision, recall, F1-score, or mean squared error (MSE) depending on the model type.

In a retail scenario, the data scientist might evaluate how well a recommendation model predicts products that customers are likely to purchase or assess the accuracy of sales forecasts. They can also conduct cross-validation to ensure that the model generalizes well to new, unseen data.

If the models do not perform as expected, the data scientist might return to the feature engineering stage or experiment with different model architectures or tuning hyperparameters (e.g., learning rate, depth of decision trees) to improve performance.

-

A/B Testing and Experimentation: Beyond predictive modeling, the data scientist is often involved in A/B testing and experimentation to validate business decisions. For instance, if a retail company wants to test a new marketing strategy or pricing model, the data scientist helps design experiments to evaluate the effectiveness of the changes.

In an A/B test, the company might segment customers into two groups: one group receives a new promotional offer, while the other group does not. The data scientist would analyze the results to determine whether the promotion led to a statistically significant increase in sales, customer retention, or other business metrics.

A/B testing ensures that decisions are data-driven and supported by evidence, minimizing the risk of implementing strategies that might not work as intended.

-

Deploying Models to Production: Once a model is deemed successful, it needs to be deployed to production so that it can provide real-time insights or make automated decisions. This might involve integrating the model into the company’s existing infrastructure, such as embedding it into a recommendation engine for an e-commerce platform or feeding it into a demand forecasting system to automatically adjust inventory levels.

The data scientist works closely with the data engineer to ensure that the models are deployed efficiently and can handle live data streams. This process includes setting up pipelines for continuous retraining of the model as new data becomes available, ensuring that the models remain accurate over time.

-

Collaboration with Stakeholders: A critical aspect of the data scientist’s role is collaboration with business stakeholders. The data scientist must communicate complex findings to non-technical team members, translating raw data into business insights that drive decision-making. This involves creating clear, understandable visualizations and reports that explain model results and their implications for the business.

For instance, after building a predictive model for customer behavior, the data scientist might present a report showing how the model predicts future purchase trends. This could guide the marketing team in tailoring campaigns to specific customer segments or assist the operations team in planning inventory for the upcoming season.

By acting as a bridge between the data and business strategy, the data scientist ensures that their technical expertise translates into tangible business value.

-

Continuous Model Monitoring and Maintenance: Once deployed, models require continuous monitoring to ensure they perform as expected over time. Market conditions, customer behavior, or other external factors can change, potentially leading to model drift, where the model’s predictions become less accurate over time.

The data scientist sets up systems to monitor key performance indicators (KPIs) for the model, such as accuracy or prediction error. If these metrics start to decline, the data scientist revisits the model to either retrain it with new data or adjust the features and parameters to account for changes in the environment. This ensures that the models remain effective and reliable in dynamic business conditions.

Impact of the Data Scientist’s Work

Through this combination of exploratory analysis, feature engineering, model building, and collaboration with stakeholders, the data scientist provides critical insights that inform strategic decisions. In the retail example, their models can help the company:

- Optimize inventory by predicting which products will be in high demand.

- Personalize marketing by targeting specific customer segments with tailored promotions.

- Enhance customer loyalty by identifying high-value customers and offering incentives to retain them.

- Increase sales through recommendation systems that suggest complementary products based on past purchases.

By leveraging data effectively, the data scientist ensures that the company not only understands its customers better but also makes smarter, data-driven decisions that improve profitability and operational efficiency.

Data Steward:

The data steward plays a critical role in managing the governance, integrity, and security of data across an organization. Responsible for ensuring that all data complies with internal policies, industry standards, and external privacy regulations, the data steward acts as the gatekeeper of data quality and governance. They work closely with both the data engineer and the data scientist to ensure that data remains trustworthy, secure, and compliant throughout its lifecycle. By establishing robust data governance frameworks, the data steward helps organizations maintain control over how data is accessed, used, and stored.

Key Responsibilities of the Data Steward:

-

Data Governance Framework Development: The data steward is responsible for developing and enforcing a data governance framework that dictates how data is managed across the organization. This framework outlines policies and procedures for data collection, usage, storage, and disposal. A strong governance framework ensures that data management aligns with the organization’s objectives while remaining compliant with laws and regulations like the General Data Protection Regulation (GDPR), California Consumer Privacy Act (CCPA), or industry-specific rules such as HIPAA in healthcare.

This framework covers:

- Data ownership: Defining who owns specific datasets within the organization.

- Data access: Setting policies on who can access, modify, or distribute data, based on role-based permissions.

- Data retention: Establishing rules on how long data should be retained and when it should be archived or deleted.

- Data privacy: Ensuring that sensitive data is protected and used in compliance with privacy regulations.

-

Ensuring Compliance with Privacy Regulations: A major aspect of the data steward’s role is ensuring compliance with privacy laws and regulations that govern how personal and sensitive information is handled. This includes overseeing how data containing personally identifiable information (PII) or confidential customer records is managed, shared, and protected.

The data steward must:

- Monitor regulatory changes: Stay up to date with evolving privacy laws and ensure that the company’s data practices are adjusted accordingly.

- Anonymize and encrypt sensitive data: Implement techniques like data anonymization, pseudonymization, and encryption to protect customer information and prevent unauthorized access.

- Oversee consent management: Ensure that customers have provided consent for their data to be collected and used, and that the organization adheres to consent withdrawal procedures.

For example, if the retail company collects customer transaction data that includes personal details like addresses or payment information, the data steward ensures that all privacy protocols are followed, and that any misuse or unauthorized access is prevented.

-

Defining Data Access and Usage Policies: The data steward collaborates with the data engineer and the data scientist to define data access and usage policies. These policies dictate who within the organization can access specific datasets and how that data can be used. The steward ensures that only authorized personnel can view or manipulate sensitive data, thereby preventing unauthorized access or accidental data breaches.

These policies often include:

- Role-based access control (RBAC): Ensuring that users only have access to data relevant to their role within the organization. For example, marketing personnel may only have access to aggregated customer data, while financial analysts can view more detailed financial transaction records.

- Logging and auditing access: Keeping track of who accesses which data and for what purpose. The data steward implements logging systems that audit access to sensitive data, ensuring transparency and accountability.

- Data usage guidelines: Establishing best practices for how data should be used within the organization to drive business insights while maintaining data quality and security.

-

Monitoring Data Quality and Integrity: The data steward plays a hands-on role in maintaining the quality and integrity of the organization’s data. Working closely with the data engineer, the steward performs regular audits of the data pipeline to ensure that data is accurate, consistent, and complete. This includes monitoring for issues such as missing data, incorrect formatting, or duplicate records.

Data quality monitoring involves:

- Regular validation checks: Setting up automated processes to validate data at various stages of the pipeline, ensuring it meets predefined quality standards.

- Error reporting: Establishing systems that automatically flag discrepancies or data quality issues for further investigation and resolution.

- Data lineage tracking: Documenting the flow of data from its point of origin to its final destination, ensuring traceability and understanding of any transformations or manipulations the data undergoes.

For example, if there is an inconsistency between sales data from different regions, the data steward works with the data engineer to trace the issue back to its source and correct it.

-

Data Security and Risk Management: Data security is a core responsibility of the data steward, who ensures that all sensitive information is protected from unauthorized access, breaches, or cyber threats. This involves establishing data security protocols and collaborating with IT and cybersecurity teams to mitigate risks. The data steward is responsible for creating and enforcing policies around:

- Encryption: Ensuring that all sensitive data, such as customer personal information or payment details, is encrypted both at rest and in transit.

- Access control: Implementing multi-factor authentication (MFA) and strong password policies to prevent unauthorized access to data systems.

- Disaster recovery and backup: Working with IT to establish data backup protocols and disaster recovery plans that ensure data availability in case of system failures or security breaches.

The data steward also regularly evaluates risks related to data security, identifying potential vulnerabilities and implementing measures to reduce the likelihood of breaches.

-

Auditing and Compliance Reporting: To maintain transparency and ensure ongoing compliance with legal requirements, the data steward is responsible for conducting regular audits of the organization’s data practices. These audits assess the organization’s adherence to privacy laws, data access policies, and security protocols.

If a regulatory agency requires an audit or inspection, the data steward is the point of contact, ensuring that the organization can provide comprehensive documentation on how it handles, stores, and processes data. Compliance reporting also involves generating reports that detail data access logs, any identified data breaches, and how they were mitigated.

For instance, if the company is required to demonstrate GDPR compliance, the data steward would provide records that show how customer data was processed, anonymized, and protected in line with the regulation.

-

Collaboration with Data Teams: The data steward acts as a liaison between various teams that handle data, including the data engineer, data scientist, and IT teams. By facilitating communication between these teams, the data steward ensures that data governance principles are applied consistently throughout the data lifecycle.

For example, the data steward may work with the data engineer to ensure that new datasets are properly documented and that data pipelines are equipped to handle sensitive information securely. They might also collaborate with the data scientist to ensure that data privacy requirements are integrated into machine learning models that process customer information.

-

Continuous Improvement of Data Governance Policies: The role of the data steward is not static; it involves continuously refining data governance policies to adapt to new challenges and opportunities. As the organization grows and its data needs evolve, the data steward is responsible for updating governance frameworks to accommodate changes in technology, business objectives, and regulatory environments.

This might include introducing new tools for data management, refining data retention policies, or enhancing data security protocols as new cyber threats emerge. By keeping governance policies up to date, the data steward ensures that the organization remains agile and prepared for future data challenges.

Impact of the Data Steward’s Work

Through their comprehensive oversight of data governance, privacy, and security, the data steward ensures that the organization’s data remains compliant, secure, and high-quality. This has several significant benefits:

- Improved decision-making: High-quality, well-governed data allows for more accurate analyses and better business decisions.

- Compliance with regulations: By ensuring compliance with privacy laws like GDPR and CCPA, the organization avoids legal penalties and maintains customer trust.

- Enhanced security: Strong data security practices reduce the risk of data breaches, protecting both the organization and its customers.

- Trustworthy data: By ensuring data integrity, the data steward helps build confidence in the organization’s data, allowing for more reliable predictions and analyses.

Ultimately, the data steward’s efforts contribute to a data environment that fosters responsible data use and enhances organizational efficiency. This ensures that the company can leverage data for strategic insights while safeguarding it from risks, thus improving overall customer satisfaction and driving business success.

Conclusion: A Call for Data Quality Leadership

The path to successful data-driven decision-making begins with high-quality data. Yet, without proper attention to data quality and the roles needed to oversee it, even the most advanced analytics and machine learning initiatives will fall short.

Organizations need to stop treating data quality as an afterthought and instead embrace it as a critical component of their data strategies. By establishing clear roles and responsibilities—whether through data stewards, curators, or quality engineers—and implementing structured frameworks for data governance, companies can ensure the accuracy, completeness, and reliability of their data, setting the stage for meaningful and actionable insights.

As the demand for high-quality data continues to grow, so too must the industry’s commitment to recognizing and investing in the unseen art of data quality.