Exploring Classic Linear Programming (LP) Problems and Scalable Solutions: A Deep Dive into PDLP

Introduction

Classic linear programming (LP) problems are some of the most foundational in computer science and operations research. Since its inception, LP has been critical in solving optimization problems across industries such as manufacturing, logistics, finance, networking, and more. As a cornerstone of mathematical programming, LP has significantly influenced the development of today’s sophisticated modeling frameworks and algorithmic approaches for data-driven decision making.

Since the 1940s, LP techniques have been refined and adapted to fit the ever-increasing complexity and size of the optimization problems faced by modern industries. The most prominent and traditional LP solvers are built around Dantzig’s Simplex Method and interior-point methods. Despite their efficacy, these solvers face substantial challenges when scaling to large, complex instances due to memory and computational constraints. In response, first-order methods (FOMs) have emerged as an alternative approach, providing a scalable solution to large-scale LP problems by reducing the reliance on resource-heavy matrix factorization techniques.

This article delves into the traditional methods of solving LP, the challenges they face in scaling, and introduces PDLP (Primal-Dual Hybrid Gradient Enhanced for LP), a modern solver that offers a scalable alternative to traditional methods by leveraging advancements in FOMs. PDLP was co-awarded the Beale-Orchard Hays Prize in 2024 for its innovative approach to computational optimization, marking a significant step forward in the field.

A Brief Overview of Linear Programming (LP)

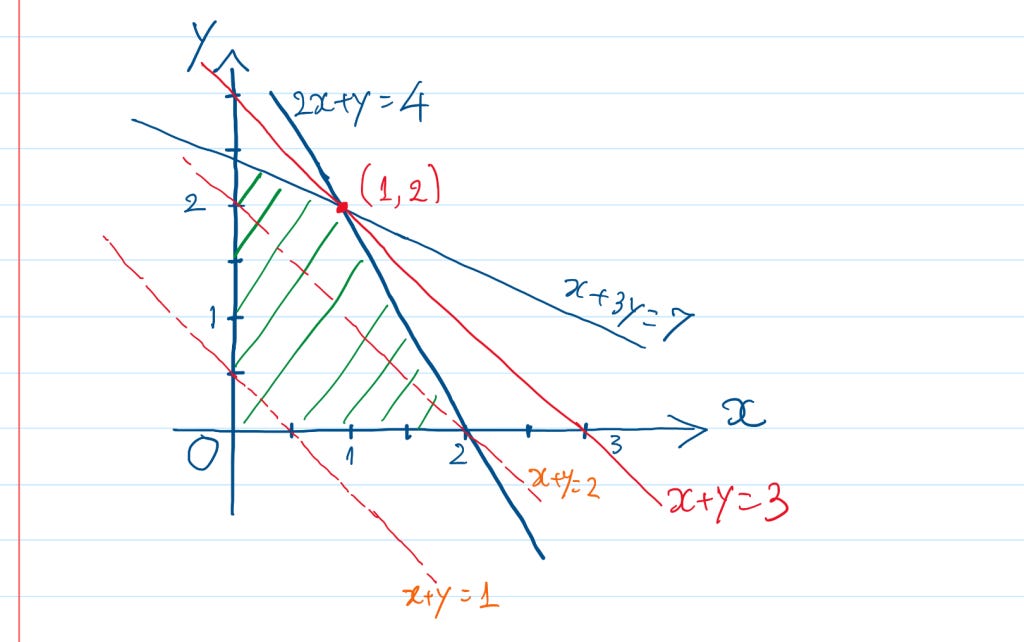

At its core, linear programming is a method for optimizing a linear objective function, subject to a set of linear equality and inequality constraints. LP models consist of:

- Objective Function: A linear function representing the goal to maximize or minimize (e.g., cost, profit, resource usage).

- Constraints: A set of linear inequalities or equalities that define the feasible region.

- Decision Variables: Variables whose values are adjusted to optimize the objective function while satisfying all constraints.

Mathematically, an LP problem can be formulated as:

\[\text{Minimize or Maximize } \mathbf{c}^T \mathbf{x}\]Subject to:

\[A \mathbf{x} \leq \mathbf{b}, \quad \mathbf{x} \geq 0\]Where:

- \(\mathbf{x}\) is the vector of decision variables,

- \(\mathbf{c}\) is the vector of coefficients for the objective function,

- \(A\) is the matrix representing the constraints,

- \(\mathbf{b}\) is the vector of constants for the inequality constraints.

Applications of Linear Programming

LP has a wide range of applications across various sectors of the global economy. Some of the prominent use cases include:

- Manufacturing: LP models are used to optimize production schedules, inventory management, and raw material usage to minimize costs and maximize output.

- Networking: LP helps optimize the flow of data across networks, determining the most efficient routing and bandwidth allocation.

- Logistics: Companies use LP to optimize transportation routes, reduce fuel costs, and improve delivery times.

- Finance: In portfolio optimization, LP helps investors allocate assets to minimize risk and maximize returns.

- Energy: LP is applied in power generation and distribution to optimize resource usage while minimizing operational costs.

In each of these applications, LP has proven to be an invaluable tool for decision-makers looking to optimize complex systems.

Traditional Methods for Solving LP

Two of the most widely-used traditional methods for solving linear programming problems are the Simplex Method and interior-point methods.

The Simplex Method

First introduced by George Dantzig in 1947, the Simplex Method is an iterative algorithm that efficiently moves along the edges of the feasible region defined by the constraints of the LP problem. By following these edges, it identifies the optimal vertex (corner point) where the objective function is maximized or minimized.

While the Simplex Method is incredibly efficient for many LP problems, it has certain limitations:

- Memory Overhead: The Simplex Method relies on LU factorization for solving systems of linear equations, which introduces significant memory demands as problem sizes grow.

- Computational Complexity: Despite being fast in practice, Simplex can degrade to exponential time in certain worst-case scenarios, though this is rare.

Interior-Point Methods

Developed in the 1980s, interior-point methods revolutionized the field of optimization by providing an alternative approach to LP. These methods work by traversing the interior of the feasible region rather than the edges, converging towards the optimal solution more quickly for certain types of problems. The most common interior-point method is Karmarkar’s Algorithm.

However, interior-point methods also have limitations:

- Cholesky Factorization: Like the Simplex Method, interior-point methods rely on matrix factorizations (specifically Cholesky decomposition) to solve linear systems, which also requires significant memory.

- Sequential Processing: Interior-point methods are difficult to parallelize due to the inherently sequential nature of their matrix factorizations, limiting their compatibility with modern parallel computing architectures such as GPUs.

Challenges in Scaling Traditional LP Methods

As LP problems grow larger and more complex, traditional methods face significant challenges:

-

Memory Overflows: The reliance on matrix factorizations in both the Simplex and interior-point methods leads to excessive memory consumption. For large-scale problems, the memory required for these factorizations can exceed the available capacity of most machines.

-

Sequential Operations: Both methods are difficult to parallelize due to their dependence on sequential matrix factorizations. This makes it challenging to leverage modern computing architectures such as GPUs and distributed systems, which excel at parallel computations.

-

Computational Complexity: For very large problems, both the Simplex and interior-point methods require substantial computational resources, making them less efficient for real-time or near-real-time decision-making.

Given these challenges, alternative methods that can efficiently handle large-scale LP problems are essential. This is where first-order methods (FOMs) come into play.

First-Order Methods (FOMs) for Linear Programming

First-order methods represent a family of optimization algorithms that rely on gradient-based approaches to iteratively update their solutions. Unlike the Simplex and interior-point methods, FOMs do not rely on matrix factorization. Instead, they use matrix-vector multiplications, which are computationally simpler and require less memory. This makes FOMs ideal for handling large-scale LP problems where memory and computational efficiency are critical.

Key Advantages of FOMs

-

Memory Efficiency: FOMs require only the storage of the LP instance itself, without the need for additional memory to store matrix factorizations. This makes them highly scalable for large problems.

-

Parallel Computation: FOMs are well-suited to modern computational platforms, such as GPUs and distributed systems, as matrix-vector multiplications can be easily parallelized.

-

Scalability: FOMs have been adapted for large-scale machine learning and deep learning tasks, making them highly efficient for optimization problems that involve large datasets or complex models.

Recent advancements in FOMs for LP have led to the development of new solvers capable of addressing the limitations of traditional methods. One such solver is PDLP.

Introducing PDLP: A Scalable Solver for Large-Scale LP Problems

PDLP (Primal-Dual Hybrid Gradient Enhanced for LP) is a first-order method (FOM)-based LP solver designed to tackle the challenges associated with large-scale linear programming problems. Developed by a team of researchers and engineers at Google, PDLP was created to leverage the strengths of FOMs and overcome the computational bottlenecks of traditional LP solvers.

How PDLP Works

At its core, PDLP uses a primal-dual hybrid gradient method, which is a first-order optimization algorithm designed for large-scale LP problems. The key innovation behind PDLP is its reliance on matrix-vector multiplications rather than matrix factorizations. This drastically reduces memory requirements and makes the solver more compatible with modern computing platforms such as GPUs and distributed systems.

Key Features of PDLP:

- Memory Efficiency: PDLP requires significantly less memory than traditional LP solvers, as it avoids matrix factorizations.

- Scalability: PDLP is designed to scale up efficiently to large LP instances, making it suitable for real-world applications in fields like logistics, networking, and machine learning.

- Modern Computing Compatibility: By using matrix-vector multiplications, PDLP is optimized for parallel processing on GPUs and distributed systems, enabling faster computation times for large problems.

Development and Recognition

PDLP has been in development since 2018 and was open-sourced as part of Google’s OR-Tools. Over the past few years, it has undergone extensive testing and refinement, culminating in its co-awarding of the prestigious Beale-Orchard Hays Prize at the International Symposium of Mathematical Programming in 2024. This award is one of the highest honors in the field of computational optimization, recognizing groundbreaking contributions to the development of optimization algorithms.

LP and First-Order Methods for LP: A Comparison

Traditional LP Solvers

| Method | Memory Usage | Computation Speed | Parallelization | Scalability |

|---|---|---|---|---|

| Simplex Method | High (due to LU factorization) | Moderate (exponential in worst-case) | Low (sequential operations) | Moderate |

| Interior-Point Method | High (due to Cholesky factorization) | Fast (polynomial time) | Low (sequential operations) | Moderate |

PDLP and First-Order Methods

| Method | Memory Usage | Computation Speed | Parallelization | Scalability |

|---|---|---|---|---|

| PDLP | Low (no matrix factorization) | Fast (matrix-vector multiplications) | High (parallelizable) | High (scalable for large problems) |

Applications of PDLP in Industry

Given its scalability and efficiency, PDLP is well-suited for a wide range of industrial applications:

-

Supply Chain Optimization: PDLP can be used to optimize complex supply chains, where large-scale LP problems arise in areas such as transportation, inventory management, and logistics. Its ability to handle large datasets and its compatibility with distributed systems make it an ideal choice for global supply chains.

-

Telecommunications and Networking: In the networking industry, PDLP can be applied to optimize bandwidth allocation, network routing, and resource distribution. Its parallel processing capabilities enable real-time optimization in large, dynamic networks.

-

Finance and Portfolio Optimization: PDLP is well-suited for solving LP problems in portfolio optimization, where financial institutions seek to allocate assets efficiently while managing risk. The solver’s ability to scale makes it ideal for optimizing large portfolios with many variables.

-

Machine Learning and AI: LP problems often arise in machine learning, particularly in tasks such as support vector machines (SVMs) and constrained optimization. PDLP’s scalability and efficiency make it a valuable tool for solving these problems in high-dimensional spaces.

Future Directions and Challenges

While PDLP offers significant advantages over traditional LP solvers, there are still challenges and areas for further research. Some of the key challenges include:

-

Algorithmic Refinement: Although PDLP is highly efficient, further research is needed to refine the underlying algorithms to improve convergence rates and reduce computation times for specific problem types.

-

Integration with AI and ML Frameworks: As data-driven decision-making becomes more prevalent, integrating PDLP with popular machine learning frameworks like TensorFlow and PyTorch will be essential for widespread adoption in AI-driven industries.

-

Expanding Applications: While PDLP has shown promise in traditional LP applications, expanding its use to non-linear programming (NLP) or mixed-integer programming (MIP) could open new avenues for optimization in more complex scenarios.

Conclusion

Linear programming continues to be a vital tool in solving complex optimization problems across various industries. While traditional methods like the Simplex and interior-point methods have served the field well, they face significant challenges in scaling to large, modern problems. First-order methods (FOMs), and specifically PDLP, offer a scalable and efficient alternative for solving large-scale LP problems. By leveraging matrix-vector multiplications and modern computational architectures such as GPUs and distributed systems, PDLP represents the future of linear programming solvers.

As the demands on computational optimization grow, solvers like PDLP will play an increasingly important role in tackling the challenges of big data, real-time decision-making, and global optimization.